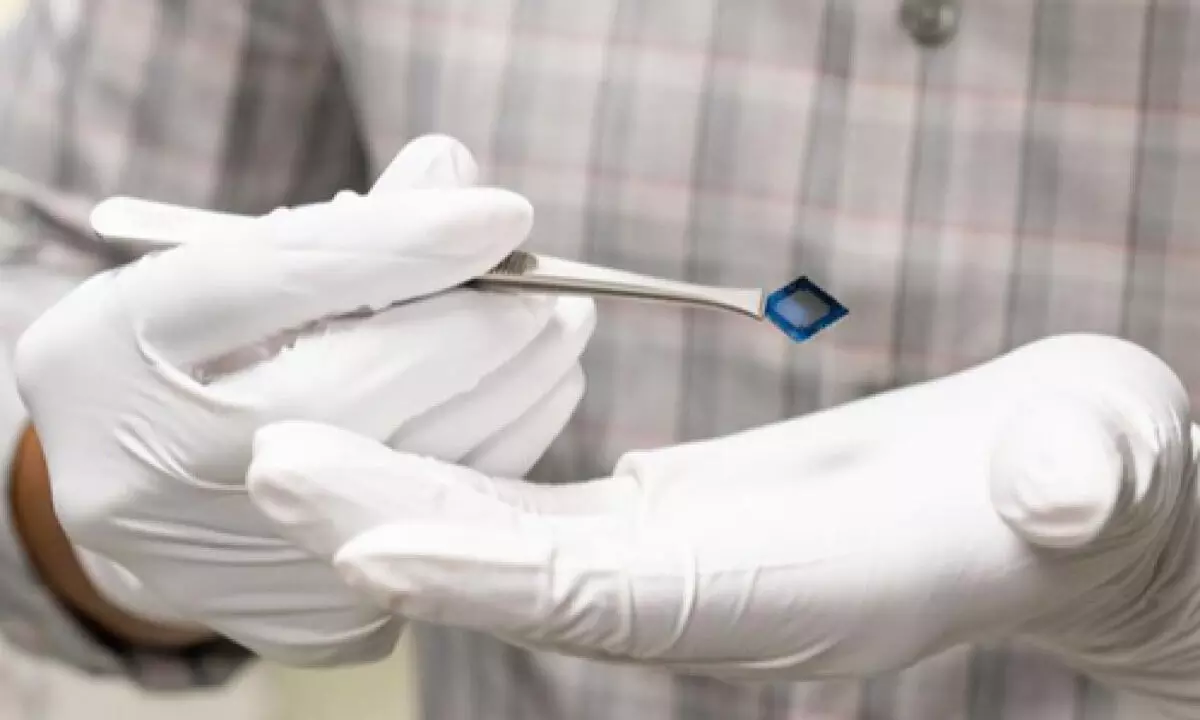

Artificial multisensory neuron developed to make AI smarter

New Delhi: Researchers led by an Indian-origin scientist in the US have applied the biological concept of multisensory integration to artificial intelligence (AI), developing the first artificial multisensory integrated neuron, which processes visual and tactile input together.

Led by Saptarshi Das, associate professor of engineering science and mechanics at Penn State, the team published their work in the journal Nature Communications.

“Robots make decisions based on the environment they are in, but their sensors do not generally talk to each other,” said Das.

“A collective decision can be made through a sensor processing unit, but is that the most efficient or effective method? In the human brain, one sense can influence another and allow the person to better judge a situation,” Das added.

The team focused on integrating a tactile sensor and a visual sensor so that the output of one sensor modifies the other, with the help of visual memory.

The sensor generates electrical spikes in a manner reminiscent of neurons processing information, allowing it to integrate both visual and tactile cues.

The team found that the sensory response of the neuron -- simulated as electrical output -- increased when both visual and tactile signals were weak.

Das explained that an artificial multisensory neuron system could enhance sensor technology’s efficiency, paving the way for more eco-friendly AI uses. As a result, robots, drones and self-driving vehicles could navigate their environment more effectively while using less energy.

“The super additive summation of weak visual and tactile cues is the key accomplishment of our research,” said co-author Andrew Pannone, a fourth-year doctoral student in engineering science and mechanics. Harikrishnan Ravichandran, a fourth-year doctoral student in engineering science and mechanics at Penn State, also co-authored this paper.
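The "super additive summation" Pannone describes means that the response to two weak cues presented together exceeds the sum of the responses to each cue alone. The short Python sketch below illustrates that idea with a toy model only: the saturating response curve and the names `response` and `superadditive_gain` are assumptions made for illustration, not the team's device model or published data.

```python
def response(drive: float) -> float:
    """Toy saturating response curve (hypothetical, not the device model).

    Small inputs produce a disproportionately small output, and the
    output levels off toward 1.0 for large inputs.
    """
    drive = max(drive, 0.0)  # ignore negative drive
    return drive ** 2 / (1.0 + drive ** 2)


def superadditive_gain(visual: float, tactile: float) -> float:
    """Combined response minus the sum of the two single-cue responses.

    A positive value means the combination is super additive.
    """
    combined = response(visual + tactile)
    separate = response(visual) + response(tactile)
    return combined - separate


if __name__ == "__main__":
    # Two weak cues (arbitrary units chosen for illustration).
    gain = superadditive_gain(0.2, 0.2)
    print("super additive:", gain > 0)
```

In this toy model, two weak cues of 0.2 each give a positive gain, which is the behaviour the quote refers to. The numbers are arbitrary and carry no physical units.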

© 2024 Hyderabad Media House Limited/The Hans India. All rights reserved.