Biased AI can hurt minority people

London: Bad data does not only produce bad outcomes. It can also help to suppress sections of society, for instance vulnerable women and minorities. This is the argument of my new book on the relationship between various forms of racism and sexism and artificial intelligence (AI).

Algorithms generally need to be exposed to data – often taken from the internet – in order to improve at whatever they do, such as screening job applications, or underwriting mortgages. But the training data often contains many of the biases that exist in the real world. For example, algorithms could learn that most people in a particular job role are male and, therefore, favour men in job applications. Our data is polluted by a set of myths from the age of “enlightenment”, including biases that lead to discrimination based on gender and sexual identity.
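The job-application example can be made concrete with a minimal sketch. It assumes a deliberately naive screening rule that scores each applicant by the historical hire rate of their group. The records, group labels and function names below are invented for illustration and do not describe any real system or data set.

```python
from collections import defaultdict

# Synthetic, made-up hiring history: (gender, hired). Not real data.
history = (
    [("male", True)] * 80 + [("male", False)] * 20
    + [("female", True)] * 5 + [("female", False)] * 15
)


def fit_hire_rates(records):
    """Learn the historical hire rate for each group in the records."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, hired in records:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {group: hired / total for group, (hired, total) in counts.items()}


rates = fit_hire_rates(history)


def score(applicant_gender):
    """Hypothetical screening score: the learned hire rate of the group."""
    return rates[applicant_gender]


# Two otherwise identical applicants receive different scores
# purely because of the imbalance in the historical data.
print(score("male"), score("female"))
```

Nothing in this rule mentions discrimination explicitly. The preference for men comes entirely from the imbalance in the records it was given.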

Judging from the history of societies where racism has played a role in establishing the social and political order, extending privileges to white males (in Europe, North America and Australia, for instance), it is simple science to assume that residues of racist discrimination feed into our technology. In my research for the book, I have documented some prominent examples.

Some examples

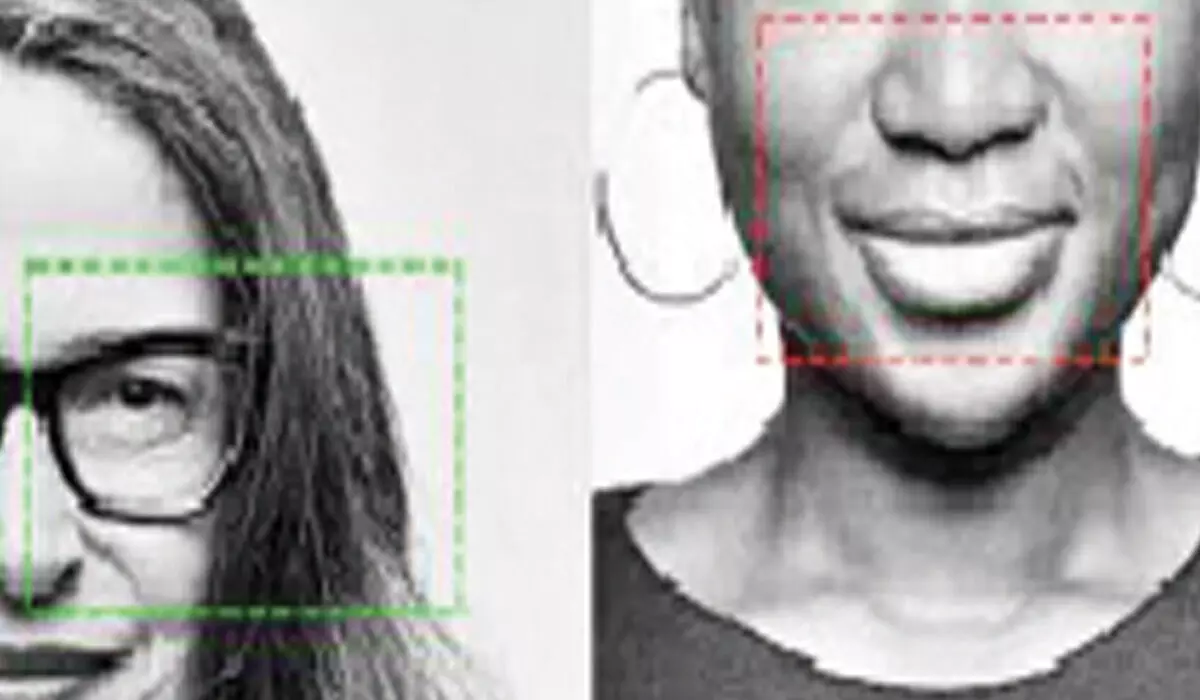

Face recognition software has more commonly misidentified black and Asian minorities, leading to false arrests in the US and elsewhere. Software used in the criminal justice system has predicted higher recidivism rates for black offenders than they actually had. There have also been flawed healthcare decisions. A study found that, of the black and white patients assigned the same health risk score by an algorithm used in US health management, the black patients were often sicker than their white counterparts. This reduced the number of black patients identified for extra care by more than half. The algorithm treated healthcare spending as a measure of need: because less money was spent on black patients who had the same level of need as white ones, it falsely concluded that black patients were healthier than equally sick white patients.
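The healthcare case can be sketched in a few lines, assuming a simplified selection rule that ranks patients by past spending and offers extra care to the top of the list. The patient records, the spending figures and the cut-off of two places are all invented for illustration. They are not taken from the study.

```python
# Synthetic patients: (group, chronic_conditions, past_cost_usd).
# Made-up numbers: at equal need, less was spent on the black patients.
patients = [
    ("white", 5, 10000),
    ("black", 5, 6000),
    ("white", 4, 8000),
    ("black", 4, 4800),
    ("white", 2, 4000),
    ("black", 2, 2400),
    ("white", 1, 2000),
    ("black", 1, 1200),
]


def select_for_extra_care(records, key, k):
    """Rank patients by the given key (highest first) and keep the top k."""
    return sorted(records, key=key, reverse=True)[:k]


def count_group(selected, group):
    """Count how many selected patients belong to the given group."""
    return sum(1 for patient in selected if patient[0] == group)


# Ranking by past cost (the proxy) versus ranking by actual need.
by_cost = select_for_extra_care(patients, key=lambda p: p[2], k=2)
by_need = select_for_extra_care(patients, key=lambda p: p[1], k=2)

print("black patients selected by cost:", count_group(by_cost, "black"))
print("black patients selected by need:", count_group(by_need, "black"))
```

In this toy data the two sickest patients are one black and one white, yet ranking by cost selects only white patients. The rule never looks at ethnicity. The disparity enters through the spending figures it was told to trust.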

Denial of mortgages for minority populations is facilitated by biased data sets. The list goes on.

Machines don't lie?

Such oppressive algorithms intrude on almost every area of our lives. AI is making matters worse, as it is sold to us as essentially unbiased. We are told that machines don't lie. Therefore, the logic goes, no one is to blame. This pseudo-objectiveness is central to the AI-hype created by the Silicon Valley tech giants. It is easily discernible from the speeches of Elon Musk, Mark Zuckerberg and Bill Gates, even if now and then they warn us about the projects that they themselves are responsible for.

Accountability

Could someone claim compensation for an algorithm denying them parole based on their ethnic background in the same way that one might for a toaster that exploded in a kitchen? The opaque nature of AI technology poses serious challenges to legal systems that have been built around individual or human accountability. On a more fundamental level, basic human rights are threatened, as legal accountability is blurred by the maze of technology placed between perpetrators and the various forms of discrimination that can be conveniently blamed on the machine. Racism has always been a systematic strategy to order society. It builds, legitimises and enforces hierarchies between the haves and have-nots.

Ethical and legal vacuum

Blindfolded by the mistakes of the past, we have entered a wild west without any sheriffs to police the violence of the digital world that's enveloping our everyday lives. The tragedies are already happening on a daily basis. It is time to counter the ethical, political and social costs with a concerted social movement in support of legislation. The first step is to educate ourselves about what is happening right now, as our lives will never be the same. It is our responsibility to plan the course of action for this new AI future. Only in this way can a good use of AI be codified in local, national and global institutions.

(The Conversation; the writer is Professor in Global Thought and Comparative Philosophies, SOAS, University of London)