Facebook cracks down on groups spreading harmful information

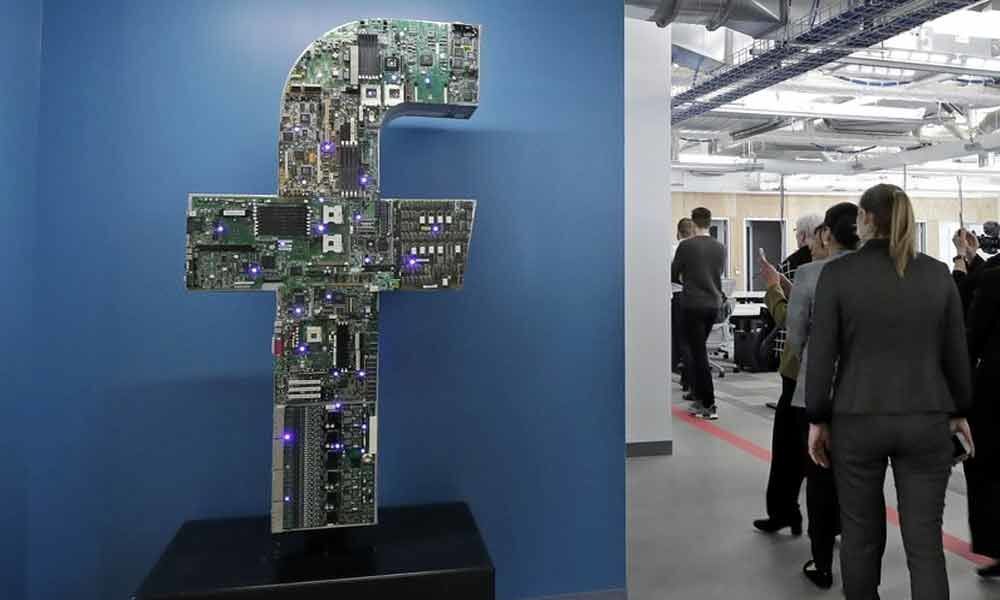

MENLO PARK, Calif.: Facebook said Wednesday it is rolling out a wide range of updates aimed at combating the spread of false and harmful information on the social media site, stepping up the company's fight against misinformation and hate speech as it faces growing outside pressure.

The updates will limit the visibility of links found to be significantly more prominent on Facebook than across the web as a whole, suggesting they may be clickbait or misleading. The company is also expanding its fact-checking program with outside expert sources, including The Associated Press, to vet videos and other material posted on Facebook.

Facebook groups — the online communities that many point to as lightning rods for the spread of fake information — will also be more closely monitored. If they are found to be spreading misinformation, their visibility in users' news feeds will be limited.

Lawmakers and human rights groups have been critical of the company for the spread of extremism and misinformation on its flagship site and on Instagram.

During a hearing Tuesday on the spread of white nationalism, members of Congress questioned a company representative about how Facebook prevents violent material from being uploaded and shared on the site.

In a separate Senate subcommittee hearing Wednesday, the company was asked about allegations that social media companies are biased against conservatives.

The dual hearings illustrate the tricky line that Facebook and other social media sites, such as Twitter and YouTube, are walking as they work to weed out problematic and harmful material while also avoiding what could be construed as censorship.

CEO Mark Zuckerberg's latest vision for Facebook, with an emphasis on private, encrypted messaging, is sure to pose a challenge for the company when it comes to removing problematic material.

Guy Rosen, Facebook's vice president of integrity, acknowledged the challenge in a meeting with reporters at Facebook's Menlo Park, California, headquarters Wednesday. He said striking a balance between protecting people's privacy and public safety is "something societies have been grappling [with] for centuries."

Rosen said the company is focused on making sure it does the best job possible "as Facebook evolves toward private communications." But he offered no specifics.

"This is something we are going to be working on, working with experts outside the company," he said, adding that the aim is "to make sure we make really informed decisions as we go into this process."

Facebook already has teams in place to monitor the site for material that breaks the company's policies against information that is overtly sexual, incites violence or is hate speech.

Karen Courington, who works on product-support operations at Facebook, said half of the 30,000 workers in the company's "safety and security" teams are focused on content review. She said those content moderators are a mix of Facebook employees and contractors, but she declined to give a percentage breakdown.

Facebook has received criticism for the environment the content reviewers work in. They are exposed to posts, photos and videos that represent the worst of humanity and have to decide what to take down and what to leave up in minutes, if not seconds.

Courington said these workers receive 80 hours of training before they start their jobs and "additional support," including psychological resources. She also said they are paid above the "industry standard" for these jobs but did not give numbers.

It's also not clear if the workers have options to move into other jobs if the content-review work proves psychologically difficult or damaging.

Even with moderators for material that clearly goes against Facebook's policies, the company is still left with the job of handling information that falls into a grayer area: material that doesn't break the rules but that most people would consider offensive, or that is false.

Facebook and other social media companies have long tried to avoid seeming like content editors and "arbiters of truth," so when material falls into a gray area they often err on the side of leaving it up, even if they make it less visible.

But if Facebook knows information is wrong, why not remove it? That's a question posed by Paul Barrett, deputy director at the New York University Stern Center for Business and Human Rights.

"Making a distinction between demoting (material) and removing it seems to us to be a curious hesitation," he said.

He acknowledged, however, that even if Facebook did remove information more aggressively, the site would never be perfect.

"There's a tension here," said Tessa Lyons, Facebook's head of News Feed. "We work hard to find the right balance between encouraging free expression and promoting a safe and authentic community, and we believe that down-ranking inauthentic content strikes that balance."

The company said Wednesday it would share more of its misinformation issues with experts and seek their advice.

Facebook faces a tough challenge, said Filippo Menczer, a professor of informatics and computer science at Indiana University. He said he's glad to see the company consult journalists, researchers and other experts on fact-checking. Menczer has spoken with the company about misinformation a couple of times recently.

"The fact that they are saying they want to engage the broader research community, to me is a step in the right direction," he said.